Deploying a Highly Available ForgeRock Stack on Kubernetes

- Juan Redondo

- Apr 22, 2020


Updated: Sep 19, 2025

In this post, I share my experience of deploying ForgeRock Access Manager (AM) and Directory Services (DS) in a highly available manner on a Kubernetes cluster.

Now that a previous post has explained how our accelerator implements the CIA triad for our sensitive data, it is time to configure our ForgeRock stack to follow the same practice at the application level. Let's assume that the target architecture to be deployed is the following:

As you can observe, each component is deployed in high availability (HA): the DS instances use the replication protocol, which can be easily enabled in our accelerator during deployment, and AM uses the clustering (site) configuration that ForgeRock offers.

You should also note that the DS instances do not scale horizontally automatically because they are stateful (they are deployed as StatefulSets), whereas the AM deployment, being stateless, can be configured to auto-scale based on incoming traffic load.
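To make the auto-scaling behaviour more concrete, here is a minimal sketch of the core rule the Kubernetes Horizontal Pod Autoscaler documents: the desired replica count is the current count scaled by the ratio of the observed metric to the target metric, rounded up and kept within the configured bounds. The post does not show how the accelerator configures autoscaling for AM, so the metric (for example, average CPU utilisation), the bounds, and the function name are assumptions for illustration only. The real autoscaler also applies a tolerance and stabilisation windows, which are left out here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int,
                     max_replicas: int) -> int:
    """Simplified HPA rule: ceil(current * observed / target), clamped to bounds.

    Hypothetical helper for illustration; it is not part of Kubernetes,
    ForgeRock, or the accelerator described in this post.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Never go below the minimum or above the maximum number of AM pods.
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # Two AM pods averaging 90% CPU against a 60% target (assumed values).
    print(desired_replicas(2, 90, 60, min_replicas=2, max_replicas=5))
```

A stateful component such as DS would not normally be driven by a rule like this, since adding a replica also means joining it to the replication topology.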

The end state of our Kubernetes cluster should look like the following:

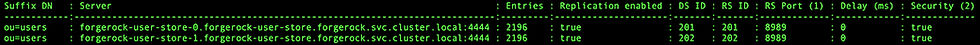

We can also verify that automatic self-replication has been enabled for our DS instances. We can use the DS user-store pods as an example to check this feature:

As observed, the two configured user-store pods are replicating all the data from the ou=users LDAP branch, which holds all the user entries that have been created during registration in AM.

It is important to mention that our ForgeRock accelerator also takes care of the host naming convention of the DS instances (userstore, tokenstore, configstore), which is based on the number of replicas specified beforehand while configuring the deployment. This ensures that all the replicas have connectivity between them, so they can self-replicate the data.
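As an illustration of how hostnames can be derived from a replica count, the sketch below builds the stable DNS names that Kubernetes gives to StatefulSet pods behind a headless service (`<statefulset>-<ordinal>.<service>.<namespace>.svc.<cluster-domain>`). The post does not show the accelerator's actual naming scheme, so the StatefulSet name, the service name, the `forgerock` namespace, and both helper functions are assumptions.

```python
from typing import List


def replica_hostnames(statefulset: str, replicas: int, service: str,
                      namespace: str,
                      cluster_domain: str = "cluster.local") -> List[str]:
    """Return the stable DNS name of each pod in a StatefulSet.

    Hypothetical helper: it follows the standard Kubernetes StatefulSet DNS
    pattern, not necessarily the convention used by the accelerator.
    """
    return [
        f"{statefulset}-{ordinal}.{service}.{namespace}.svc.{cluster_domain}"
        for ordinal in range(replicas)
    ]


def replication_peers(hosts: List[str], self_host: str) -> List[str]:
    """Every other replica a given DS instance would need to reach."""
    return [host for host in hosts if host != self_host]


if __name__ == "__main__":
    # "userstore" comes from the post; the namespace is an assumed value.
    hosts = replica_hostnames("userstore", 2, "userstore", "forgerock")
    for host in hosts:
        print(host, "->", replication_peers(hosts, host))
```

Because the names depend only on the replica count, every instance can compute the full peer list before the other pods have started.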

The next step is to verify how the AM clustering configuration has been applied. There are some caveats when deploying AM in HA, mainly related to the use of secrets in a clustered environment (https://bugster.forgerock.org/jira/browse/OPENAM-14771).

The bug that the ticket describes will cause the following Catalina stack trace on your secondary AM instance once scaled:

This exception occurs because, during the deployment of the primary AM instance, AM creates the /secrets/encrypted/storepass and /secrets/encrypted/entrypass entries, which contain randomly generated hashes used to access the AM keystore and the keystore aliases, respectively.

These entries are not automatically created on the secondary server, thereby preventing AM from:

- opening the keystore

- using any of the aliases to sign any token/assertion issued by that AM instance

This also results in an exception being thrown when trying to access the JWK_URI endpoint for token verification in all the secondary AM instances:

In a VM-based deployment, the solution for this issue would be to copy the keystore files and password entries (/secrets/encrypted/storepass and /secrets/encrypted/entrypass) from the primary instance to all the secondary instances, and then restart the secondary instances so that AM can retrieve the new keys during start-up. This can all be easily automated using an IT automation tool such as Ansible.
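The copy step can be sketched as follows. This is a minimal, local-filesystem illustration rather than the accelerator's implementation or an Ansible playbook: each directory stands in for the AM configuration root of one instance, the keystore file names are deliberately left to the caller because the post does not name them, and the restart of the secondary instances is not shown. The function names are hypothetical.

```python
import hashlib
import shutil
from pathlib import Path
from typing import Iterable, List


def sha256_of(path: Path) -> str:
    """Hex digest of a file, used to detect files that are out of sync."""
    return hashlib.sha256(path.read_bytes()).hexdigest()


def sync_secrets(primary_root: Path, secondary_roots: Iterable[Path],
                 relative_paths: Iterable[str]) -> List[Path]:
    """Copy the primary's files to each secondary when missing or different.

    Hypothetical helper: returns the destination files that were written,
    so the caller knows which secondaries need a restart afterwards.
    """
    rels = list(relative_paths)
    written: List[Path] = []
    for root in secondary_roots:
        for rel in rels:
            src = primary_root / rel
            dst = root / rel
            if dst.exists() and sha256_of(dst) == sha256_of(src):
                continue  # already aligned with the primary
            dst.parent.mkdir(parents=True, exist_ok=True)
            shutil.copy2(src, dst)  # copy2 also preserves file metadata
            written.append(dst)
    return written
```

A caller would pass the two password entries named above (relative to each instance's root) together with whichever keystore files the deployment uses, and restart only the instances that appear in the returned list.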

In Kubernetes, this operation is a bit more complex since restarting the container can cause the main process of the pod to be killed, and hence the pod will remain in a continuous “Error” status.

Our accelerator has been designed to take care of this issue by storing and retrieving the keystore files and password entries at runtime and by automatically managing the restart of the secondary AM servers. Once you configure the desired number of AM replicas during deployment, you do not need to worry about key alignment and can instead focus on using the features ForgeRock offers!

I hope this solves a few frustrations for those trying to resolve high availability issues when deploying ForgeRock on Kubernetes.

Writer’s Overview – Juan Redondo

Juan Redondo – Co-Founder & Head of Identity, Midships

Juan is a certified IAM specialist with 12+ years of experience architecting CIAM platforms for global banks and retailers. He leads the Identity practice at Midships, blending deep product knowledge with hands-on delivery in complex environments.

Short bio: Juan brings technical excellence in Ping, ForgeRock, and Kubernetes, delivering scalable, secure identity solutions from concept to production.
